EBKEN10011: 32-Bit and Memory Management.

Introduction

There is a lot of confusion about how much memory you can really use under 32-bit Windows.

Does it make sense to install 4 GB of RAM in your computer? Or even more? Is it better to stay below 3 GB? And what about “your application doesn’t get more than 2 GB anyhow”?

When does swapping start? What is fragmentation all about? Ever heard of the “nonpaged pool”? What’s a “memory leak”?

I cannot possibly answer all these questions to everybody’s satisfaction, but I’ll try to clear up some misunderstandings and get the basic ideas across, so that the questions and their answers can be understood a little better…

32-Bit And Bus Driving

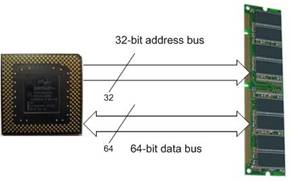

What does 32-Bit really mean? And what’s a bus? If you have never seen a computer from the inside, that’s no problem; looking at the hardware wouldn’t have told you much about the underlying concepts anyway. Fig. 1 shows the basics.

Between your CPU and your memory modules there are basically 32 lines referred to as the “Address Bus” and 64 lines known as the “Data Bus”. In recent layouts these “buses” can look quite different (for example as the “front side bus” or FSB), but the basics behind them have remained the same, so don’t bother yourself with wild drawings of chipsets and the interior of the CPU ;-)

fig. 1: CPU – Buses – RAM

Now pay attention to the arrows: addresses only travel away from the CPU, while data can move to and from the processor. This means that the CPU puts addresses on the address bus and, depending on whether it is a read or a write operation, the data is put on the data bus by either the memory or the CPU.

Of course in real life there are a lot more units and signals involved, but that’s the confusing part and shouldn’t interest us right now.

Excursion Into The Past – And Some Technical Stuff

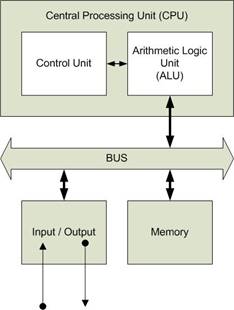

So why is the width of the address bus so important that a whole computer generation is named after it? Because it’s one of the most limiting factors. Transferring data to and from the CPU at a satisfactory speed always was (and still is) the bottleneck of the whole architecture. To understand this, let’s take a look at fig. 2:

fig. 2: von-Neumann Architecture

Almost every modern computer is based on the so-called von-Neumann architecture, which defines a universal computing machine. It’s a “SISD”-class architecture, meaning it works sequentially (Single Instruction, Single Data).

It was first described in 1945 by John von Neumann, a brilliant mathematician and early computer scientist working in Princeton.

And here’s the catch: every single piece of data (and every instruction) must be transferred over a bus. The wider the bus, the faster the transfer.

This behaviour has been named the “von-Neumann bottleneck” because whatever you do to your CPU or your memory, the data still has to be squeezed through a limited number of bus lines.

There are lots of workarounds for this problem: different types of caching, parallel CPUs, pipelines, additional buffers and control units, and the separation of address and data bus.

But the bottleneck can still be identified by the number of (address) bus lines. That’s why the PC generation is named after this number.

You can see the limitation best when looking back to the eighties. Intel won the race for the “personal computer” by reducing the complexity, and the price, of its 8088 CPU (PC, XT), while Motorola kept its 68000 chip (Apple, Unix) with lots of features that are still considered “state of the art” today.

The 8088 chip worked with a 16-bit bus internally, while only 8 data bits (or lines) were connected externally. Addresses were put out on 20 external address lines.

A Little Computing

Once you know the width of your bus, it’s easy to calculate how much memory your computer can make use of. Since we’re talking about the digital world, there are just 0s and 1s. Why? Because you can switch the light ON (1) or OFF (0); there’s no dimming the light in this world…

So, each line has only two states (0 and 1). All the ON and OFF combinations over, let’s say, 20 address lines make 2^20 different states. If you type that into your calculator, you get 1,048,576 or, in other words, 1,024 Kilo or 1 Mega. Did I forget the “Byte”? No: every address selects one group of 8 bits, matching the 8 data lines of the 8088, so that makes “1 Mega Byte” or in short 1 MB.

Our 32-Bit architecture adds up to 2^32 = 4,294,967,296 bytes, or 4 GB. This is the maximum amount of memory our CPU can address.
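If you don’t have a calculator at hand, the arithmetic can be checked with a few lines of Python. This is just a sketch: the function name `addressable_bytes` is made up for illustration, and it assumes that every address selects exactly one byte.

```python
def addressable_bytes(address_lines: int) -> int:
    """Number of distinct addresses reachable with the given number of address lines.

    Assumes each address selects exactly one byte (byte-addressable memory).
    """
    return 2 ** address_lines


for lines in (20, 32):
    size = addressable_bytes(lines)
    print(f"{lines} address lines -> {size:,} bytes = {size // 2**20:,} MB")
```

Every additional address line doubles the result, which is why the jump from 20 to 32 lines is so enormous.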

So how come we hear so much about more or less memory / RAM? To get a better understanding, we need to take a closer look at the different types or “ideas” of memory.

What Memory?!

First of all, there’s physical memory, some kind of hardware you can take in your hand, smell or taste. But please, don’t take me literally ;-)

One short warning: when touching memory modules, take special care because the chips can be destroyed by static electricity. If this happens, you cannot see it from the outside; only a memory test can confirm full functionality.

So, when handling memory modules:

- Don’t shuffle across carpets

- Don’t rustle plastic bags, Styrofoam etc.

- Don’t put modules near devices that build up strong static charges (CRT, TV, laser printer, photocopier etc.)

- Store modules only in antistatic wrapping

- Ground yourself before touching modules (touch some grounded metal like a radiator, the computer case or something similar).

Physical Memory

Talking about “physical” means you can actually touch it (with the above warning in mind) and press it into the appropriate sockets of your mainboard.

If you’re not sure what kind of memory and how much of it you need, I’m afraid I can’t tell you. Depending on your mainboard and CPU, there can be several options. Whether you need PS/2, DDR1-3, SDRAM, RIMM etc. depends on your mainboard specs. The speed is mainly defined by the clock speed of your CPU, which must be set on the mainboard. Whether you need to fill banks of sockets equally or can use single and differing modules is defined by the mainboard layout, too.

So, if you need information about that, better start reading the manual of your mainboard…

In this little ebook, we use the word “memory” for different concepts (virtual vs. physical, for instance), but in the physical sense we refer ONLY to RAM, Random Access Memory. Every other type of storage (like hard disks, CD-ROM etc.) is not memory in the sense of the von-Neumann machine; it’s addressed via the Input/Output unit.

What about Cache Memory?

While there have been computers in the past that could be equipped with (especially fast) cache modules in addition to the normal RAM, this is of no relevance today. These caches have long been integrated into other circuitry, mainly the CPU. What is a cache? It’s simply a way of buffering your data between two units of different speeds. Basically it’s just one of the tweaks to widen the von-Neumann bottleneck. No more, no less.

How is my RAM used?

Since it’s THE memory usable by the CPU, everything from now on is a question of organization – i.e.: software.

A CPU on its own would be a dumb thing; a fast one with a certain accuracy, but plainly dumb. In figure 1 there is a “Control Unit”, which gives it the needed senses. It contains the rules needed to operate the CPU, sometimes referred to as “microcode” or “instruction set”. This is the first piece of software, built into the CPU. These are the basic instructions the CPU can carry out. No matter what operating system, what application or equipment: this software carries it all out.

In this microcode we find the first routines that organize the physical RAM. Since this happens long before the boot sequence, not even the BIOS (Basic Input Output System) is involved. Speaking of the BIOS: it is the next step, loading very basic routines into memory before the first bit of any Operating System (OS) is loaded. And this is the first explanation of why you will never be able to use all of your physical RAM from within your OS. It also explains why this does not mean the RAM is wasted.

Leaving the simple von-Neumann schematic behind, a modern computer of course has its own instances to control the use of memory. The controller involved in memory management (itself a kind of specialized CPU, if you like) is usually called the “Memory Management Unit” or MMU.

While the MMU is nowadays integrated into the CPU, its function has remained the same: it translates virtual addresses to physical ones. What does that mean, and what is it good for? Memory needs some organization, since many different instances put their instructions and data into it.

Some programs are rather simple and need to be put in specific areas of the RAM, others are loaded and freed again, data is loaded and unloaded and so on. In order not to be forced to regularly rearrange data in RAM, the MMU puts it anywhere and saves its address. When an application requests certain data, it uses its own (virtual!) addressing scheme. The MMU calculates the corresponding physical address, or looks it up (translates it), before the physical address is put on the bus.

In order to simplify address management, RAM is usually divided into so-called pages of a certain size. If an application needs memory, it gets a whole page allocated. The next time, it gets its portion from inside this previously allocated space. This goes on until the page is filled entirely; then the next page is allocated, and so on.
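To make the translation a bit more tangible, here is a toy model in Python. It is only a sketch under assumptions of my own: a page size of 4096 bytes (common on 32-bit x86, but not stated above), a made-up `page_table` with three entries, and a hypothetical function `translate`. A real MMU does this in hardware with multi-level tables.

```python
PAGE_SIZE = 4096  # assumed page size in bytes (4 KB is common on 32-bit x86)

# Hypothetical page table: virtual page number -> physical frame number.
page_table = {0: 7, 1: 2, 2: 9}


def translate(virtual_address: int) -> int:
    """Translate a virtual address to a physical one via the page table."""
    # Split the address into "which page" and "where inside that page".
    page_number, offset = divmod(virtual_address, PAGE_SIZE)
    frame_number = page_table[page_number]  # KeyError if the page is not mapped
    # The offset inside the page stays the same; only the page is relocated.
    return frame_number * PAGE_SIZE + offset


print(hex(translate(0x1234)))
```

The application only ever sees the virtual address on the left side of the table. Where the page really sits in RAM is the MMU’s business.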

About Fragmentation

Once we know how data is read from and written to physical memory, it’s easy to understand fragmentation. When contiguous data is loaded, it might occupy a contiguous area in RAM too, but when segments or pages in this area are freed again, the gaps are filled with other data, possibly belonging to other programs. After a while, this formerly contiguous area looks like a patchwork with blank spaces in between. This is called fragmentation. It’s better known from hard disks, and the problem is the same, with the difference that in a RAM module no time is lost positioning a mechanical head. Here the addressing methods of the MMU determine whether fragmentation is a problem or not.
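The patchwork can be shown with a tiny simulation. This is a sketch with made-up names (`frames`, `largest_free_run`) and only eight page frames, shared by two imaginary programs “A” and “B”.

```python
def largest_free_run(frames: list) -> int:
    """Length of the longest run of consecutive free (None) frames."""
    best = current = 0
    for owner in frames:
        current = current + 1 if owner is None else 0
        best = max(best, current)
    return best


# Eight page frames, occupied by programs "A" and "B" in alternation.
frames = ["A", "B", "A", "B", "A", "B", "A", "B"]

# Program "A" exits and frees its frames.
frames = [None if owner == "A" else owner for owner in frames]

free_total = frames.count(None)
print(f"free frames: {free_total}, largest contiguous run: {largest_free_run(frames)}")
```

Half of the memory is free, yet there is no contiguous gap larger than a single frame. If memory had to be handed out in physically contiguous blocks, that would be a problem. With page-wise address translation, a program can still be given the scattered frames and see them as one continuous virtual area.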

Virtual Memory

When talking about fragmentation, we already found out that applications use a different memory management scheme than the MMU with its actual RAM.

Since software is programmed independently of the system it will run on, its development needs to be independent as well. To achieve this, the programming environment is based on the fundamentals, 32-Bit for instance.

32-Bit allows for addressing 4 GB, as we found out earlier. Accordingly, you can make use of up to 4 GB of memory in your software. When this software is run, it doesn’t care about the hardware: its memory is virtualized.

“Page Fault” is not an Error: Paging

Now how does this work if a program actually allocates more RAM than is present in the machine it has been started on? By paging.

When explaining the MMU, we saw how memory is divided into “pages” of a certain size to make memory management easier. When the physical memory fills up beyond a certain threshold, data that does not seem to be needed as often is moved out of RAM. It is transferred to a space on a hard disk, for example (Linux uses a “swap partition”, Windows a “pagefile”). This space is organized similarly to the RAM, with pages of the same size, which can be swapped between RAM and hard disk as needed. Because this process takes extra time, the computer becomes slower and, in the worst case, can be busy just swapping back and forth; this is called “thrashing”.

On the one hand, it’s best to have as much RAM as possible. On the other hand, every program “thinks” it can use up to 4 GB by itself, and many programs together add up to more than is physically possible, so you may never be able to work without any paging.

A “Page Fault” is the exception raised by the MMU when a virtual address is requested whose physical equivalent has been moved out to disk. So it’s not an error; it’s the break signal for getting the needed data back into RAM before the program can continue.
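Here is a toy model of paging in Python. Take it as a sketch only: the class `TinyPager` is invented for this ebook, and the rule for choosing which page to move out (“least recently used”, LRU) is my assumption; real operating systems use more refined strategies.

```python
from collections import OrderedDict


class TinyPager:
    """Toy model of demand paging with least-recently-used (LRU) eviction."""

    def __init__(self, ram_frames: int):
        self.ram_frames = ram_frames
        self.ram = OrderedDict()   # pages currently in RAM, least recently used first
        self.page_faults = 0

    def access(self, page: int) -> None:
        if page in self.ram:
            self.ram.move_to_end(page)        # mark as most recently used
            return
        self.page_faults += 1                 # page is not in RAM: page fault
        if len(self.ram) >= self.ram_frames:
            self.ram.popitem(last=False)      # move the least recently used page out
        self.ram[page] = True                 # bring the requested page into RAM


pager = TinyPager(ram_frames=3)
for page in [1, 2, 3, 1, 4, 2]:
    pager.access(page)
print("page faults:", pager.page_faults)
```

If you give the pager just one frame and let a program alternate between two pages, every single access ends in a page fault. That is thrashing in miniature.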

Logical Address Organization

Virtual memory of 32-Bit applications needs some structure. A program distinguishes between commands (“code”), fixed “data”, variables and parameters. Whenever a function (a kind of sub-program) is called, the main program needs to remember the point where this happens. Without this point (or address), the function could never return to the calling program.

This “point of return” is put on the so-called “Stack”, a memory area that works as a “LIFO” (last in, first out). Function after function is called and each calling point is saved on top of the stack, the way you put books on top of each other. When returning to each calling routine, the appropriate point of return lies on top, the same way you would take the books from the stack to put them back on their shelf.
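The pile of books can be sketched in a few lines of Python: the point of return that was saved last is the one taken off first. The names `return_stack`, `call` and `ret` are made up for this illustration; a real CPU does the same thing with dedicated call and return instructions.

```python
return_stack = []  # a Python list used as a stack: append = push, pop = take from top


def call(return_point: str) -> None:
    """Remember where to continue after the called function finishes."""
    return_stack.append(return_point)


def ret() -> str:
    """Return to the most recently saved point (last in, first out)."""
    return return_stack.pop()


call("main, line 10")      # main calls function f
call("f, line 3")          # f calls function g
first = ret()              # g finishes
second = ret()             # f finishes
print(first, "|", second)
```

When g finishes, execution continues inside f; only after f finishes does it continue in main. Afterwards the stack is empty again.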

Other data (for example data referenced by so-called pointers) is allocated during program execution and released again shortly after. It is allocated on the “Heap”, another memory portion that you would not like to get mixed up with your stack or your code or data segment.

All these different data structures are neatly organized in different address areas of the possible 4 GB -- NO! STOP! TIME OUT! That wouldn’t work, BECAUSE…

…Kernel Mode vs. User Mode And The /3GB Switch

Because you never have just one program; there are quite a few programs running at more or less the same time (operating system kernel, drivers, applications). Whenever these programs need to share data with each other (for example, your program wants to save data to the hard disk), this has to be done in a memory area both programs can access. So there needs to be some space that is addressable from every application. This memory space is often referred to as “Kernel Mode”, since the OS kernel is in charge of this area. It can hold global variables and data structures needed for overall communication.

This memory space has to be subtracted from the possible 4 GB, leaving an application only 2 GB in Windows, or 3 GB in Linux or in Windows when using the “/3GB” switch in the boot.ini file. The rest is reserved for “Kernel Mode”.
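The split is easy to put into numbers. In this sketch I assume that the user part starts at address 0 and the kernel part sits above it, which is how 32-bit Windows lays it out; the function name `split` is made up.

```python
GB = 2 ** 30
TOTAL_VIRTUAL = 4 * GB  # 32-bit virtual address space


def split(user_gb: int) -> tuple:
    """Return (user bytes, kernel bytes) for a given user-mode share in GB."""
    user = user_gb * GB
    return user, TOTAL_VIRTUAL - user


for label, user_gb in (("Windows default", 2), ("Windows with /3GB, or Linux", 3)):
    user, kernel = split(user_gb)
    # Assumption: user space occupies the low addresses, starting at 0.
    print(f"{label}: user 0x00000000-0x{user - 1:08X}, kernel {kernel // GB} GB")
```

Whatever the application gains with the 3 GB layout, the kernel loses: it has to get by with 1 GB instead of 2 GB.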

And don’t forget: we are still talking about virtual memory. Because the Kernel Mode area has to be found by every application, it always resides at the same virtual addresses, but in physical RAM it can be found elsewhere, since the MMU translates these addresses to suit its needs.

fig. 3: Virtual Memory. OS space (“Kernel Mode”) is shared between the kernel and other programs; it always resides in the same address area. Stack and Heap are for dynamic memory allocations; they can grow and shrink during runtime. Once they crash into each other, you get something you might have seen already: a memory exception, and the program is terminated.

Paged and Nonpaged Pool

These two pools serve as memory resources in which the operating system and device drivers store their data structures.

Several system limits in Windows, such as the number of handles, mutexes or processes, depend directly on the sizes of these two kernel resources.

The kernel’s pool manager operates similarly to the heap manager in user mode. One of its primary responsibilities is to make the best possible use of free memory regions in allocated pages.

Paged Pool

Nonpaged Pool

Can I Use 4 GB or Not?!

If you think about installing the largest possible amount of RAM in order to boost performance, you might come across statements that your OS (no matter whether Linux, Windows XP, Vista or Windows 7) can only make use of approximately 3 GB. So why not install exactly 3 GB?

It’s not that easy to answer, but you’ll come to a conclusion. What happens to your PHYSICAL memory at boot time?

After the first initializations, the BIOS is loaded. What does that mean? The BIOS is read from non-volatile (slow) memory (ROM, Read Only Memory) into RAM for quick access. The BIOS provides very basic routines which are used as drivers or by drivers. For example, in order to boot your operating system, you need access to your hard disk. This is done by BIOS routines.

You can imagine, many many MANY operations during runtime are performed by the underlying BIOS routines – so you really want them in the fastest memory possible.

Later, while your OS is loaded, drivers and dedicated memory spaces are initialized for possible later use. All this has to reside in certain portions of the PHYSICAL memory. As long as you have less physical than virtual memory, your operating system shows the physical memory as if it were all present and usable for applications. But we already know that half or 25% belongs to Kernel Mode. Drivers and BIOS routines are simply ignored, since the OS will be swapping memory pages anyhow.

When coming close to the actual size limit, it gets more difficult. The OS can no longer ignore the reserved spaces in physical memory, so they are left out of the amount displayed.

If you install exactly 3 GB and your OS displays them as physical memory, it still has reserved space. If your video card, for instance, reserves its 256 MB in physical RAM, it does so no matter whether you have 3 or 4 GB installed, but the leftover 2.75 or 3.75 GB do make a difference.

When your machine is equipped with 4 GB and your OS displays “only” 3.2 GB, you just don’t see the hundreds of megabytes reserved by the system itself, but they are being used.
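The video card example is simple enough to recalculate. The sketch below uses only the 256 MB from the example; the function name `leftover_gb` is made up, and a real system reserves more than just this one block.

```python
RESERVED_MB = 256  # example from the text: memory claimed by the video card


def leftover_gb(installed_gb: int, reserved_mb: int = RESERVED_MB) -> float:
    """RAM left after subtracting a fixed reservation, in GB."""
    return (installed_gb * 1024 - reserved_mb) / 1024


for installed in (3, 4):
    print(f"{installed} GB installed -> {leftover_gb(installed):.2f} GB left")
```

The reservation is the same in both cases, so the full extra gigabyte of the 4 GB machine ends up on the usable side.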

Another disadvantage of installing 3 GB could be (depending on the mainboard layout) an asymmetric access mode for your RAM. Usually, the more symmetrically and homogeneously your memory banks are used, the better your system can take advantage of them.

So if you ask me: with today’s prices for memory modules – definitely YES. Install as much as your OS and your mainboard support (as long as you can afford it).

More than 4 GB With 32-Bit?

You might have heard about 32-Bit systems able to use more than 4 GB. How come? We learned a lot about addressing, but there was always this capacity limit based on the number of address lines.

Now it should be easy to understand: most applications “live” in their own virtual world, where the address space is contiguous and belongs to them, just like everyday people live in their small towns. They don’t care much about the universe or the speed at which their home planet is racing around the sun. It’s the same with little programs: they don’t “know” or care where their pages reside, be it RAM, hard disk or wherever…

PAE! – “Physical Address Extension”

All you need is a mechanism which allows virtual address spaces to be moved elsewhere and, of course, the appropriate hardware. This explains why Microsoft operating systems, unlike Linux, do not offer this functionality in their desktop or small server editions.

Where’s the catch? We already found one: you need the appropriate hardware. Not only the RAM modules, but also a mainboard suitable for Address Extension (slots, caches, BIOS routines, …) and a CPU that supports it (remember, the MMU is a part of modern CPUs).

Once we have all this together, we have to choose an operating system capable of making use of all these features, and PAE needs to be enabled too.
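To put a number on it: this figure is not taken from the sections above, but on x86 CPUs PAE originally widened the physical address from 32 to 36 bits, while every program keeps its 32-bit virtual address space. Under that assumption, the sums look like this:

```python
GB = 2 ** 30

VIRTUAL_BITS = 32        # per-program virtual address width (unchanged by PAE)
PAE_PHYSICAL_BITS = 36   # assumption: original x86 PAE physical address width

per_program_gb = 2 ** VIRTUAL_BITS // GB
physical_gb = 2 ** PAE_PHYSICAL_BITS // GB

print(f"each program still sees {per_program_gb} GB of virtual address space")
print(f"the machine can address up to {physical_gb} GB of physical RAM")
```

So PAE does not make a single 32-Bit program any bigger. It only gives the system more physical room in which to keep the pages of many programs at once.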

Another problem can result from drivers. Drivers are little programs dedicated to certain tasks, mainly to enable I/O Operations to specific hardware. Drivers are most commonly associated with printers, scanners, USB-devices, cameras, storage, communication devices and so on.

And every one of us has surely sworn at them: many drivers do not always function the way they should…

Where is the connection with PAE? Some of these little buggers “think” they are smarter than everyday applications because they know about the universe, meaning that some drivers work with physical memory directly.

Once you get trouble with drivers or BIOS routines, it can have bad results for your overall system. Since it’s a little outside the normal 32-Bit world, you might not get the vendor support you need.

But whether PAE makes sense or not should be discussed elsewhere.

Help! My Memory is leaking!!!

What does this mean? What can I do about it?

We learned a lot about virtual memory and how it’s organized. When an application uses dynamic memory (function calls, threads, pointers, arrays, …), the appropriate space needs to be allocated. Once it’s not used any more, the application has to take care of freeing this space again (fig. 3, Stack and Heap). If this does not happen, more and more memory gets locked up over time. If you are lucky, you just need to close the faulty application. If it’s worse, you might need to log off and on again, or even restart.

In some cases, the culprit is not accessible in user mode. With really bad luck, your system might even freeze or crash. Faulty drivers in particular can cause such severe problems.

The first thing to do is to monitor memory usage over time. Usually your operating system contains one or more tools to display memory usage (for example the “Task Manager” in Windows).
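The same idea, taking a reading at regular intervals and watching the trend, can be sketched with Python’s standard `tracemalloc` module. Note the assumptions: `tracemalloc` only sees memory allocated by the Python program itself, not the whole system, and the leaking function `do_work` is made up for the demonstration.

```python
import tracemalloc

leak = []  # deliberately never cleared, to simulate a leaking program


def do_work() -> None:
    """One unit of 'work' that forgets to release about 1 MB."""
    leak.append(bytearray(1_000_000))


tracemalloc.start()
samples = []
for step in range(5):
    do_work()
    current, _peak = tracemalloc.get_traced_memory()
    samples.append(current)
    print(f"step {step}: {current / 1_000_000:.1f} MB in use")
tracemalloc.stop()

# A leak shows up as a value that keeps climbing and never comes back down.
growing = all(later > earlier for earlier, later in zip(samples, samples[1:]))
print("steadily growing:", growing)
```

A healthy program goes up and down around some level. A leaking one only knows one direction.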

If the leak is deep in one of your system (kernel) drivers, it might be harder to discover. In these cases, you might need vendor support, or you’ll have to narrow it down by trial and error.

Wrap Up

By now you should understand the context in which the word “memory” is used. Some support threads end up in useless discussions between people who mix up physical and virtual limitations, or 32- and 64-Bit.

It’s not the first sound barrier: 32-Bit replaced the 16-Bit world years ago. With time, 32-Bit applications, drivers and operating systems will become less important and we will enjoy new and fast systems for a while, until we hit the next sound barrier.

But whether this will result in 128-Bit von-Neumann systems or in completely new machines with optical processors and holographic storage devices built by nano robots, who knows?

Disclaimer:

The information provided in this document is intended for your information only. Lubby makes no claims to the validity of this information. Use of this information is at your own risk!

About the Author

Author: Ingo Bock - Keskon GmbH & Co. KG

Latest update: 27-08-2022